PCI DSS 4.0 is the most significant update to the standard in over a decade. For merchants, it means higher expectations and more ongoing scrutiny. The new version calls for clearer scoping, stronger monitoring, and continuous validation of controls. In practice, that often translates into expanding scope, heavier evidence requests from auditors, and release freezes while compliance teams scramble to gather documentation.

The practical effect is that PCI compliance consumes more time and resources than ever before. Systems that touch cardholder data are pulled into scope, even if they only interact with it occasionally. Logs must be produced, access controls reviewed, and retention policies enforced. For global merchants running payments across web, mobile, and call center channels, scope creep can feel relentless. Every year, audit season brings the same disruption.

This article will break down what’s changed in PCI DSS 4.0, explain how tokenization helps shrink scope, and show how it maps to core control areas. We’ll also cover network tokens and account updater services, and highlight practical steps merchants can take to simplify compliance and make evidence collection a steady routine rather than a disruptive scramble.

PCI DSS 4.0: What’s changed and why it matters

PCI DSS 4.0 keeps the spirit of earlier versions but changes how merchants prove compliance. The standard is no longer about passing a point-in-time audit – it’s about showing that controls work every day. For large enterprises, that means compliance feels broader, deeper, and harder to treat as a once-a-year project.

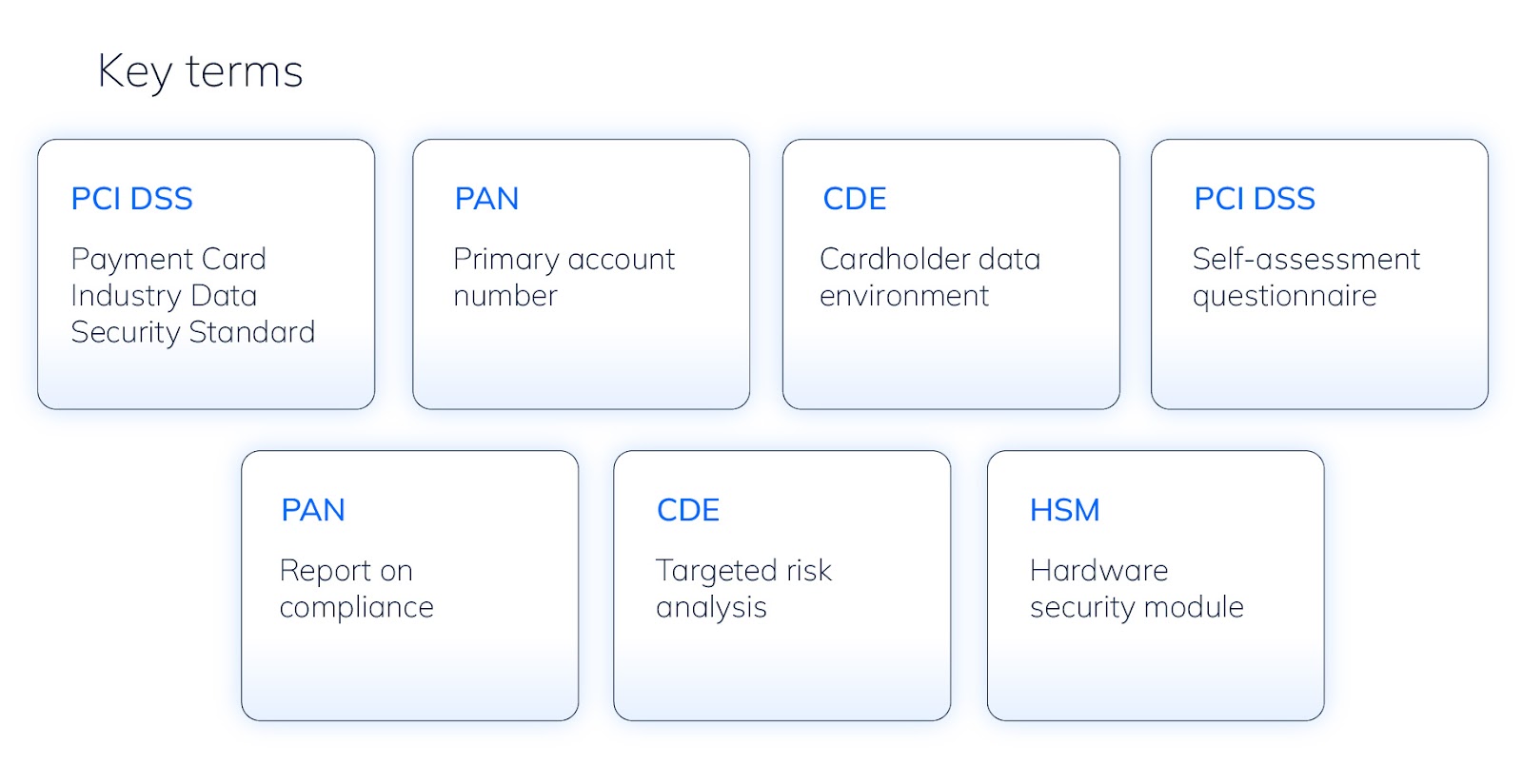

Four shifts matter most:

- Clearer scoping guidance. When handling customer data, merchants must fully map where the Primary Account Number (PAN) can enter, how it flows, and where it might be stored. Even systems that don’t hold PAN directly may fall in scope.

- Stronger ongoing monitoring. Auditors expect continuous evidence: access reviews, change approvals, vulnerability fixes, and incident tracking. Snapshots aren’t enough anymore.

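- Targeted risk analysis (TRA). Where merchants use the standard’s flexibility, such as setting how often a periodic control runs or meeting a requirement through the customized approach, they must justify the choice with a documented risk analysis that explains the decision and how effectiveness will be reviewed.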

- Defined vs. customized approach. Requirements can be followed exactly as written, or merchants can prove they meet the same objective differently if they can show equivalent security.

For merchants, these shifts change the compliance conversation. It is less about checking boxes and more about demonstrating active control. Tokenization helps by reducing how many systems ever touch PAN, concentrating sensitive activity in one hardened place, and producing clean logs that make continuous monitoring easier.

The key is to decide early where the defined approach is simplest and where a customized approach fits your architecture better. At the same time, list which evidence can be automated – access logs, approval trails, configuration changes, detokenization events, and incident timelines.

Automating evidence exports helps merchants stay ready for Self-Assessment Questionnaire (SAQ) or Report on Compliance (RoC) submissions without pausing delivery.

Tokenization: More valuable than ever

One of the biggest challenges in PCI compliance is that PANs have a way of showing up everywhere: databases, logs, analytics tools, even error trackers. Each new system that touches PAN expands the cardholder data environment (CDE) and adds more controls, evidence, and risk.

Tokenization solves this by removing PANs from day-to-day systems. The real PAN is stored in a secure vault, and applications work with a surrogate token that has no value outside the system. Tokens can be used for common tasks like storing a card on file, initiating a charge, or issuing a refund, while detokenization is only allowed under strict policy and is always logged with who requested it and why.
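
To make the vault-and-token idea concrete, here is a minimal Python sketch under stated assumptions: `InMemoryVault`, `tokenize`, and `detokenize` are hypothetical names, tokens are random strings, and the "vault" is a dictionary. A real vault encrypts PAN at rest, runs in a hardened and assessed environment, and enforces policy before revealing anything; the sketch only shows that applications hold a meaningless token while every detokenization is logged with who asked and why.

```python
import secrets
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List


@dataclass
class InMemoryVault:
    """Toy token vault: maps random surrogate tokens to PANs.

    Illustration only. A real vault encrypts PAN at rest, runs in a hardened,
    assessed environment, and checks policy before any detokenization.
    """
    _store: Dict[str, str] = field(default_factory=dict)
    audit_log: List[dict] = field(default_factory=list)

    def tokenize(self, pan: str) -> str:
        # Random token: it reveals nothing about the PAN and is useless outside the vault.
        token = "tok_" + secrets.token_urlsafe(16)
        self._store[token] = pan
        return token

    def detokenize(self, token: str, requester: str, reason: str) -> str:
        if not requester or not reason:
            raise PermissionError("detokenization needs a named requester and a reason")
        # Log who asked and why before the PAN leaves the vault.
        self.audit_log.append({
            "event": "detokenize",
            "token": token,
            "requester": requester,
            "reason": reason,
            "at": datetime.now(timezone.utc).isoformat(),
        })
        return self._store[token]


vault = InMemoryVault()
token = vault.tokenize("4111111111111111")    # well-known test card number
order = {"order_id": "A-100", "card": token}  # application code stores only the token
pan = vault.detokenize(token, requester="refunds-service", reason="REFUND-123")
```

The design choice worth noting is that the token is random rather than derived from the PAN, so nothing about the card can be recovered from it without the vault.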

Tokenization vs. encryption

It’s important to distinguish tokenization from encryption. Encryption protects data but still leaves merchants responsible for key management and for securing every system that stores the encrypted payload. Those systems remain in scope. With tokenization, the PAN never enters those systems at all, so the compliance footprint shrinks dramatically. Encryption still plays a role within the vault and during transmission, but most of the environment never touches PAN in the first place.

Different tokens

There are also different flavors of tokens. Processor-issued tokens make it easy to repeat charges with a single provider but can limit flexibility. Merchant-controlled tokens, by contrast, live in the merchant’s vault and can be used across multiple payment service providers (PSPs). This approach keeps routing options open, makes it easier to add or switch PSPs, and enables advanced payment logic – such as fallback, retries, and issuer-specific routing – without expanding scope.
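
A merchant-controlled token is what makes PSP fallback possible without widening scope: the same token can be sent to any provider, and the proxy resolves it to PAN in flight. The sketch below assumes a hypothetical `proxy_charge(psp, token, amount)` callable and simple outcome strings; real PSP responses and decline codes are richer than this.

```python
from typing import Callable, List, Optional, Sequence, Tuple

# Hypothetical signature: (psp_name, merchant_token, amount_in_minor_units) -> outcome.
# The proxy swaps the token for the PAN in flight, so this code never sees PAN.
ProxyCharge = Callable[[str, str, int], str]


def charge_with_fallback(
    token: str,
    amount_minor: int,
    psps: Sequence[str],
    proxy_charge: ProxyCharge,
) -> Tuple[Optional[str], List[Tuple[str, str]]]:
    """Try PSPs in priority order with the same merchant-controlled token."""
    attempts: List[Tuple[str, str]] = []
    for psp in psps:
        outcome = proxy_charge(psp, token, amount_minor)
        attempts.append((psp, outcome))
        if outcome == "approved":
            return psp, attempts
        if outcome == "hard_decline":
            # e.g. closed account: another PSP will not change the issuer's answer.
            break
    return None, attempts


def fake_proxy_charge(psp: str, token: str, amount_minor: int) -> str:
    # Stand-in for the real proxy call: psp_a is having an outage.
    return "unavailable" if psp == "psp_a" else "approved"


winner, attempts = charge_with_fallback("tok_abc", 1999, ["psp_a", "psp_b"], fake_proxy_charge)
```

Stopping on a hard decline avoids pointless retries elsewhere, while outages and soft declines move on to the next provider.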

For compliance teams, the value is clear: fewer systems to govern, less evidence to collect, and clean audit trails around the rare cases when detokenization is necessary.

A practical first step is to inventory where PANs live today. Many teams discover unexpected exposures: server logs that captured PAN from misconfigured form posts, analytics events with full card fields, or error traces containing request bodies.

Once the inventory is complete, define detokenization policies: who can request it, for what purpose, with what approvals, and for how long access is granted.
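
Those four questions (who, for what purpose, with what approvals, for how long) translate naturally into a policy check that runs before any detokenization. This is a minimal sketch; the roles, purposes, approval counts, and time limits in `POLICY` are hypothetical placeholders, and `check_detokenization` is an illustrative name rather than a product API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import FrozenSet, Iterable, Tuple


@dataclass(frozen=True)
class DetokenizationRule:
    purposes: FrozenSet[str]   # what the PAN may be revealed for
    approvals_required: int    # approvers other than the requester
    max_grant: timedelta       # how long an approved grant stays valid


# Hypothetical roles and purposes; replace with your own policy.
POLICY = {
    "chargeback_analyst": DetokenizationRule(frozenset({"chargeback_evidence"}), 1, timedelta(hours=4)),
    "payments_ops": DetokenizationRule(frozenset({"psp_migration"}), 2, timedelta(hours=1)),
}


def check_detokenization(
    requester: str,
    role: str,
    purpose: str,
    approvers: Iterable[str],
    granted_at: datetime,
    now: datetime,
) -> Tuple[bool, str]:
    rule = POLICY.get(role)
    if rule is None:
        return False, "role not permitted"
    if purpose not in rule.purposes:
        return False, "purpose not permitted for role"
    independent = set(approvers) - {requester}  # self-approval does not count
    if len(independent) < rule.approvals_required:
        return False, "insufficient approvals"
    if now - granted_at > rule.max_grant:
        return False, "grant expired"
    return True, "ok"


now = datetime.now(timezone.utc)
decision = check_detokenization(
    "ana", "chargeback_analyst", "chargeback_evidence", ["ben"], now - timedelta(hours=1), now
)
```

Returning a reason alongside the decision means every denial can be logged as evidence too, not only the approvals.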

Keep PAN out at the edge: Capture once, tokenize everywhere

PCI DSS 4.0 brings sharper scoping rules. Merchants are now expected to document exactly where PANs can enter, how they flow, and which systems can influence their protection. That makes edge capture more important than ever. If card data enters your environment in the wrong place (even briefly), it can expand the cardholder data environment and pull dozens of systems into scope.

The best defense is to capture card data once and tokenize it immediately. On the web or in mobile apps, this means routing card fields directly from the front end to a secure proxy service. The proxy exchanges the PAN for a token and returns only the token to the application. Back-end systems then process tokens instead of raw card data, keeping them out of scope.

The same principle applies to support and call centers. Agents should never see or type PAN into internal tools. Guided capture flows route card details directly to the vault. When a payment or update is needed, the proxy handles detokenization under policy and logs every sensitive step.

Third-party scripts also take on new weight under PCI DSS 4.0. Analytics libraries, tag managers, or A/B testing tools that can access payment fields may now be considered in scope. Merchants can reduce risk by limiting scripts on checkout pages and isolating payment fields in iframes or segmented DOM elements.

By keeping PAN out at the edge, merchants reduce the number of systems subject to PCI DSS requirements, minimize the blast radius of potential vulnerabilities, and make SAQ and RoC assessments more predictable.

Practical steps:

- Route web and app form posts directly to the proxy.

- Block PAN from logs and data stores with validators.

- Run secret scanners tuned to PAN patterns to enforce boundaries.
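
The validator and scanner steps both come down to recognizing something that looks like a PAN. Below is a minimal Python sketch, assuming PANs appear as 13 to 19 digits with optional spaces or hyphens: a regular expression finds candidates and a Luhn checksum screens out most order IDs and timestamps. `redact_pans` and `PanRedactingFilter` are illustrative names, not a library API, and pattern matching like this will still produce some misses and false positives.

```python
import logging
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens.
PAN_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact_pans(text: str) -> str:
    def _mask(match: "re.Match[str]") -> str:
        digits = re.sub(r"\D", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            return "[PAN REDACTED]"
        return match.group()  # looks numeric but fails Luhn: leave it alone

    return PAN_CANDIDATE.sub(_mask, text)


class PanRedactingFilter(logging.Filter):
    """Logging filter that rewrites each record with PAN-like values masked."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = redact_pans(record.getMessage())
        record.args = ()  # message is already fully formatted
        return True
```

Attach the filter to handlers (for example `handler.addFilter(PanRedactingFilter())`) rather than only to a logger, because logger-level filters are not applied to records propagated from child loggers. The same `redact_pans` check can back a CI step that fails when fixtures or logs contain a match.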

Map tokenization to PCI 4.0 control areas

One of the big shifts in PCI DSS 4.0 is the emphasis on continuous evidence. Auditors now expect merchants to prove – not just once a year, but day-to-day – that controls are working. For many enterprises, this creates a scramble: logs are fragmented across systems, access reviews happen inconsistently, and developers accidentally expose card data in test environments.

Tokenization helps by reducing scope and simplifying how several requirement families are met:

- Data protection. Applications never store primary account numbers (PANs) at rest. A centralized vault encrypts sensitive data and enforces strong authentication, authorization, and segregation. This simplifies key management and reduces accidental exposure.

- Access controls. Detokenization is wrapped in least-privilege workflows. Approvals, time-bound access, and multi-party reviews create a clear chain of responsibility for every event that reveals PAN.

- Logging and monitoring. A vault-plus-proxy model produces consistent logs for token creation, access attempts, policy changes, and detokenization events. These logs form the backbone of PCI evidence, and using the same service across channels avoids the fragmentation that slows audits.

- Secure development. Clean boundaries reduce the risk of PAN leaking into developer tools, test datasets, or crash reports, and they make code reviews easier because fewer modules touch sensitive flows.

To operationalize this, merchants can map vault and proxy events directly to their PCI control matrix. For each objective, define which log lines, approvals, and change records count as evidence, and automate exports so that control owners receive a ready-made package each month. When the auditor arrives, the narrative from policy to implementation to logs is already in place.
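
Mapping events to controls can be as simple as a lookup table plus a monthly grouping job. In this sketch, the event types and control IDs in `CONTROL_MAP` are hypothetical placeholders for your own control matrix, and `monthly_bundle` is an illustrative name; events that map to nothing are returned separately so gaps in the matrix surface early instead of during the audit.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, Iterable, List, Tuple

# Hypothetical mapping from vault/proxy event types to internal control IDs.
# Both the event names and the control IDs are placeholders for your own matrix.
CONTROL_MAP: Dict[str, List[str]] = {
    "token_created": ["LOG-01"],
    "detokenized": ["ACCESS-01", "LOG-01"],
    "policy_changed": ["CHANGE-01", "LOG-01"],
    "access_reviewed": ["ACCESS-02"],
}


def monthly_bundle(
    events: Iterable[dict], year: int, month: int
) -> Tuple[Dict[str, List[dict]], List[dict]]:
    """Group one month's events by control; return unmapped events separately."""
    bundle: Dict[str, List[dict]] = defaultdict(list)
    unmapped: List[dict] = []
    for event in events:
        at: datetime = event["at"]
        if (at.year, at.month) != (year, month):
            continue
        controls = CONTROL_MAP.get(event["type"])
        if not controls:
            unmapped.append(event)  # a gap in the matrix, worth flagging to the control owner
            continue
        for control in controls:
            bundle[control].append(event)
    return dict(bundle), unmapped
```

One event can count toward several controls (a detokenization is both an access decision and a log entry), which is why the map holds lists.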

Tokenization doesn’t just reduce scope: it creates a foundation for more consistent governance. With data, access, and logging under control, merchants are better prepared to tackle PCI DSS 4.0’s next challenge: defining retention policies, managing encryption keys, and applying targeted risk analysis (TRA) to areas where flexibility is allowed.

Governance essentials: TRA, retention, and key management

PCI DSS 4.0 raises the bar not only for technical controls but also for governance. It asks merchants to show how decisions are made, justified, and enforced over time. Without this layer, even strong technical safeguards can fail in practice.

The challenge for many enterprises is that governance tends to slip into gray areas: too much data is retained “just in case,” alternative controls are applied inconsistently, and no one is sure who owns key management. PCI DSS 4.0 makes this harder to ignore by emphasizing documented analysis and clear accountability.

Three areas deserve early focus:

- Targeted risk analysis (TRA). PCI DSS 4.0 allows flexibility, but merchants must justify it. A TRA is expected wherever the standard lets you set how often a periodic control runs, and for every requirement met through the customized approach. Document the context, the risk, the alternative safeguard, and why it’s sufficient. Assign owners and set a review cadence so decisions don’t go stale.

- Data retention. Keep tokens, detokenization records, and any detokenized data only as long as business or regulatory needs require. Refund policies, chargeback timelines, and local laws differ by region, so align retention with real obligations. Defaults should expire automatically; exceptions should be tracked.

- Key management. Even with tokenization, encryption is still required inside the vault and in transit. Manage cryptographic keys with a hardware security module (HSM). Separate duties so no single person can both create and use keys, rotate keys on schedule and on events, and log every administrative action.
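
"Defaults should expire automatically; exceptions should be tracked" can be encoded directly in a purge job. This is a minimal sketch: the record kinds and periods in `RETENTION` are hypothetical and should come from your real refund, chargeback, and legal obligations, and `apply_retention` is an illustrative name.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

# Hypothetical retention periods; set these from real refund, chargeback, and legal needs.
RETENTION = {
    "detokenization_record": timedelta(days=365),
    "detokenized_export": timedelta(days=7),
}


@dataclass
class Record:
    record_id: str
    kind: str
    created_at: datetime
    hold_until: Optional[datetime] = None  # tracked exception, e.g. an open dispute
    hold_reason: str = ""


def apply_retention(records: List[Record], now: datetime) -> Tuple[List[Record], List[Record]]:
    """Split records into (keep, purge). Expiry is the default; holds are explicit."""
    keep: List[Record] = []
    purge: List[Record] = []
    for record in records:
        if record.hold_until is not None and record.hold_until > now:
            keep.append(record)  # active exception: keep, and report it
            continue
        # KeyError for an unknown kind is deliberate: every type needs a defined period.
        expires_at = record.created_at + RETENTION[record.kind]
        (purge if now >= expires_at else keep).append(record)
    return keep, purge
```

Because holds carry an end date and a reason, the list of active exceptions doubles as retention evidence.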

Within this new environment, it’s also a good idea to define a clear “break-glass” process for emergencies. If detokenization outside normal policy becomes necessary, require multi-party approval, time-limited access, and automatic post-incident review. Treat exceptions as learning opportunities and refine policies to prevent repeat cases.
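
A break-glass path differs from routine detokenization in that it is rare, tightly capped, and always reviewed afterwards. The sketch below assumes two independent approvers and a 30-minute ceiling; `REQUIRED_APPROVERS`, `MAX_TTL`, `open_break_glass`, and `review_queue` are hypothetical names and values, not a prescribed configuration.

```python
from datetime import datetime, timedelta
from typing import Iterable, List

# Hypothetical limits; set them in your own break-glass policy.
REQUIRED_APPROVERS = 2
MAX_TTL = timedelta(minutes=30)

review_queue: List[dict] = []  # every grant lands here for post-incident review


def open_break_glass(
    requester: str,
    reason: str,
    approvers: Iterable[str],
    now: datetime,
    ttl: timedelta = MAX_TTL,
) -> dict:
    if not reason:
        raise ValueError("break-glass access needs a recorded reason")
    independent = sorted(set(approvers) - {requester})  # requester cannot self-approve
    if len(independent) < REQUIRED_APPROVERS:
        raise PermissionError(f"break-glass access needs {REQUIRED_APPROVERS} independent approvers")
    grant = {
        "requester": requester,
        "reason": reason,
        "approvers": independent,
        "expires_at": now + min(ttl, MAX_TTL),  # cap the window regardless of the request
    }
    review_queue.append({"grant": grant, "status": "pending_review"})
    return grant


def is_active(grant: dict, now: datetime) -> bool:
    return now < grant["expires_at"]
```

Queuing the review at grant time, rather than relying on someone to remember it later, is what makes the post-incident step automatic.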

With governance in place, merchants can balance PCI DSS 4.0’s flexibility with confidence, taking advantage of TRA and customized approaches without creating blind spots. The next step is to look at network tokens and account updater services, which combine security benefits with direct impact on revenue.

Network tokens and account updater: security that also converts

One of the hidden costs of payments is lost revenue from expired or replaced cards. For merchants that rely on subscriptions or card-on-file transactions, failed renewals translate directly into customer churn. PCI DSS 4.0 raises security expectations, but merchants also need to maintain conversion and keep recurring payments running smoothly.

That’s where network tokens and account updater services come in. A network token is issued by the card networks and replaces the PAN with a credential that stays valid even if the underlying card changes. When paired with an account updater, these tokens ensure that stored credentials stay current. The practical effect is fewer soft declines, fewer failed retries, and a smoother renewal experience for customers.

Network tokens work best alongside merchant-controlled tokens. In a hybrid model, a merchant token can reference a network token for eligible transactions. This approach preserves routing flexibility while benefiting from network-managed lifecycle updates.
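
One way to picture the hybrid model is a stored credential that always has a merchant token and sometimes also references a network token. The sketch assumes a hypothetical `StoredCredential` record and a simple "prefer the network token when it is active and the PSP supports it" rule; real network-token charges may also need a cryptogram from the token service, which is left out here.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class StoredCredential:
    """Hypothetical record: a merchant token that may reference a network token."""
    merchant_token: str
    network_token: Optional[str] = None   # provisioned via the card network, if eligible
    network_token_active: bool = False    # lifecycle status kept current by the network


def credential_for_charge(cred: StoredCredential, psp_supports_network_tokens: bool) -> Tuple[str, str]:
    """Prefer an active network token; otherwise fall back to the merchant token.

    Either way the application handles only tokens: the proxy resolves the
    merchant token to PAN in flight when no network token can be used.
    """
    if cred.network_token and cred.network_token_active and psp_supports_network_tokens:
        return "network_token", cred.network_token
    return "merchant_token", cred.merchant_token
```

Keeping the merchant token as the primary key is what preserves routing flexibility: a PSP without network-token support can still be used.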

It’s important to note that network tokens do not automatically reduce PCI DSS scope. Merchants must still govern how PAN enters their systems and ensure it is captured through a proxy and immediately tokenized. Network tokens enhance both security and conversion for repeat charges, but tokenization remains the foundation of scope reduction.

A practical adoption plan is to tokenize new cards with network tokens going forward, while selectively backfilling high-value legacy records where the business case is strongest. Track approval rates, retry performance, and customer churn to measure the impact.

Evidence and audit: make SAQ/RoC faster

For many enterprises, the increased emphasis on continuous validation means audits drag on because evidence is scattered across teams, buried in different tools, or only produced at the last minute. The result: product releases stall, and teams spend weeks pulling screenshots instead of shipping features.

The remedy is to standardize what evidence you produce and to generate it on a schedule. Four building blocks make the biggest difference:

- Access reviews. Maintain a clear record of who can request detokenization and who can approve it. Record attestations and time-box elevated access.

- Change approvals. Track changes to the proxy or vault, including configuration updates and policy edits. Capture who proposed the change, who reviewed it, and when it was deployed.

- Incident logs. Keep a timeline that ties alerts, responses, and outcomes together. Where relevant, link incidents to detokenization events for full traceability.

- Detokenization records. Capture which tokens were detokenized, by whom, under which ticket, and for what purpose. Aggregate by service and use case to show patterns.
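
Aggregating detokenization records by service and use case takes only a few lines once each record carries those fields. In this sketch the field names (`service`, `purpose`, `ticket`) are assumptions about your log schema, and `summarize_detokenizations` is an illustrative name; it also flags records with no ticket, which are the ones an auditor will ask about first.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def summarize_detokenizations(
    records: Iterable[dict],
) -> Tuple[Counter, List[dict]]:
    """Count detokenizations per (service, purpose) and flag records without a ticket."""
    records = list(records)
    by_use_case = Counter((r["service"], r["purpose"]) for r in records)
    missing_ticket = [r for r in records if not r.get("ticket")]
    return by_use_case, missing_ticket
```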

By automating exports on a monthly schedule, self-assessment questionnaires (SAQ) or Reports on Compliance (RoC) become routine rather than disruptive. Assigning clear owners for each control area ensures nothing is missed, while checklists make coverage explicit. When auditors can trace a control from policy to implementation to logs, review cycles go faster and findings are easier to resolve.

The good news is that making audits easier doesn’t require a big-bang rearchitecture. Merchants can take an incremental path to PCI DSS 4.0: by starting with tokenization at the edge and then expanding it across systems, they can reduce scope and deliver steady improvements without halting the business.

Implementation patterns that do not require a big‑bang rewrite

One of the biggest concerns merchants have with PCI DSS 4.0 is that meeting the new standard will require a full-scale rebuild of their payment systems. For enterprises already running complex multi-channel setups, that feels risky and expensive. The good news: it doesn’t have to happen all at once. Tokenization can be adopted in stages, with measurable improvements from the very first step.

A phased approach works best:

- Start at the edge. Tokenize new card captures first. Update web and mobile clients to send card data directly to the proxy and receive tokens in return. This step alone moves most application code out of scope.

- Migrate by risk. Tackle the services that carry the highest exposure: refund handling, payment data syncs, or third-party integrations. Replace PAN payloads with tokens at integration boundaries.

- Retire PAN stores. Backfill tokens into legacy tables and decommission datasets holding PAN. For long-tail cases, shorten retention, restrict access, and apply compensating controls until migration is complete.

- Measure and iterate. Track how many systems remain in the cardholder data environment, how long evidence prep takes, and the volume of detokenization events. Use these metrics to guide the next wave and to communicate progress to stakeholders.

A realistic first milestone is to pick one flow (such as subscriptions or saved cards) for the initial cutover. Prove the model works, measure the scope reduction, then extend the approach to other flows. Each step reduces risk and makes audits lighter without disrupting product development.

This staged adoption paves the way for a broader platform strategy—one where tokenization, governance, and analytics come together in a single operating system for payments.

Conclusion: Shrink PCI scope and keep control

For merchants, PCI DSS 4.0 brings two competing pressures: higher expectations from auditors and the need to keep product delivery on track.

What matters most for compliance is the operational detail. If PCI scope keeps expanding or audit prep is slowing your releases, Payrails can help you get ahead of it – without requiring a big-bang rewrite.

Next steps:

- Map where PAN currently flows and identify where tokenization can replace it.

- Move capture to a proxy so your applications receive tokens only.

- Define detokenization policies and break-glass procedures.

- Automate monthly evidence bundles for access, change, incident, and detokenization logs.

FAQs

What changed in PCI DSS 4.0 for storing and transmitting card data?

PCI DSS 4.0 keeps the core objectives but tightens how you prove control. You’ll see clearer scoping guidance for any system that stores, processes, transmits, or could affect the security of cardholder data. There is stronger emphasis on ongoing monitoring and evidence (such as access reviews, change approvals, incident timelines, and audit logs) rather than point-in-time checks. The standard also formalizes flexibility through targeted risk analysis (TRA) and the option to meet requirements via a defined or customized approach. Practically, this means documenting data flows end-to-end, showing encryption in transit, managing keys properly, and proving your controls work every day, not just at audit time.

How does tokenization reduce PCI scope under PCI DSS 4.0?

Payment tokenization removes PAN from most systems by replacing it with a surrogate token and storing the real card data in a vault run by a PCI DSS Level 1 service provider. When your apps only handle tokens, fewer components sit inside the cardholder data environment (CDE). Under PCI DSS 4.0’s scoping guidance, that reduction directly shrinks the systems and processes the assessor must review. The result is smaller SAQ/RoC effort, a tighter blast radius for incidents, and clearer, centralized evidence (access logs and detokenization records) that speeds audits.

Do network tokens count toward PCI DSS scope reduction?

Network tokens improve security and conversion for card-on-file and recurring payments, but they are not a silver bullet for scope. Network tokens help by keeping credentials current and reducing reliance on raw PAN for repeat charges, but if PAN can still enter your environment anywhere, those entry points remain in scope. For scope reduction, pair network tokens with a merchant-controlled tokenization model and edge capture via a proxy so PAN never reaches your applications. Think of network tokens as complementary to tokenization, not a replacement for it.

What’s the difference between SAQ D and a RoC in PCI DSS 4.0?

SAQ D is a self-assessment questionnaire used by merchants or service providers with complex environments or broad acceptance channels. You complete it yourself (often with guidance) and attest to compliance. A RoC (Report on Compliance), by contrast, is a formal assessment performed by a Qualified Security Assessor (QSA) or, where allowed, an Internal Security Assessor (ISA). Large or higher-risk merchants are typically required by their acquirer or card brands to undergo a RoC. In practice, SAQ D can be quicker but still demands solid evidence, while a RoC goes deeper, with interviews, sampling, and control testing. Tokenization and centralized logs help either path by reducing scope and standardizing proof.

How should merchants plan targeted risk analysis (TRA) for PCI DSS 4.0?

Merchants can plan for TRA by treating it as a lightweight but disciplined decision record. Start by naming the control objective and the business context. Describe the risk you’re addressing, including relevant threats, likelihood, and potential impact. Propose your alternative control and explain how it meets the objective. Define measurable safeguards such as access boundaries, monitoring, retention, and response steps, along with the owner, review cadence, and success metrics. Capture the evidence you’ll produce automatically (audit logs, approvals, change history), and schedule periodic reassessment. A consistent TRA template makes customized approaches defensible and repeatable under PCI DSS 4.0.
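
A TRA template can live as a structured record rather than a free-form document, which makes overdue reviews easy to detect. This is a minimal sketch: `TargetedRiskAnalysis` and its fields are hypothetical, the example values are made up, and the 12-month default cadence is an assumption you should confirm against the requirement text you rely on.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import List


@dataclass
class TargetedRiskAnalysis:
    """Hypothetical TRA decision record; field names are illustrative."""
    control_objective: str
    business_context: str
    risk: str                 # threats, likelihood, potential impact
    alternative_control: str  # how the objective is still met
    owner: str
    last_reviewed: date
    # Assumed 12-month cadence; confirm against the requirement text you rely on.
    review_every: timedelta = timedelta(days=365)
    safeguards: List[str] = field(default_factory=list)
    evidence_sources: List[str] = field(default_factory=list)
    success_metrics: List[str] = field(default_factory=list)

    def next_review(self) -> date:
        return self.last_reviewed + self.review_every

    def is_overdue(self, today: date) -> bool:
        return today > self.next_review()


tra = TargetedRiskAnalysis(
    control_objective="Detect unauthorized changes to payment page scripts",
    business_context="Checkout served from a CDN; releases ship several times a week",
    risk="Injected script could read card fields before they reach the proxy",
    alternative_control="Payment fields in an isolated iframe plus script change monitoring",
    owner="payments-security",
    last_reviewed=date(2024, 1, 15),
    safeguards=["script allow-list", "alert on script hash change"],
    evidence_sources=["deploy approvals", "script inventory diff", "alert log"],
    success_metrics=["alerts triaged within 24h"],
)
```

A nightly job that lists records where `is_overdue` is true gives owners the reminder before the assessor does.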
